Philadelphia, USA

{ ds3729, tdn47, aa4639, mcs382 }@drexel.edu

TL;DR: Synthetic image detectors don't work on synthetically generated videos. We provide empirical evidence as to why and propose ways to adapt existing detectors to work on synthetic videos.

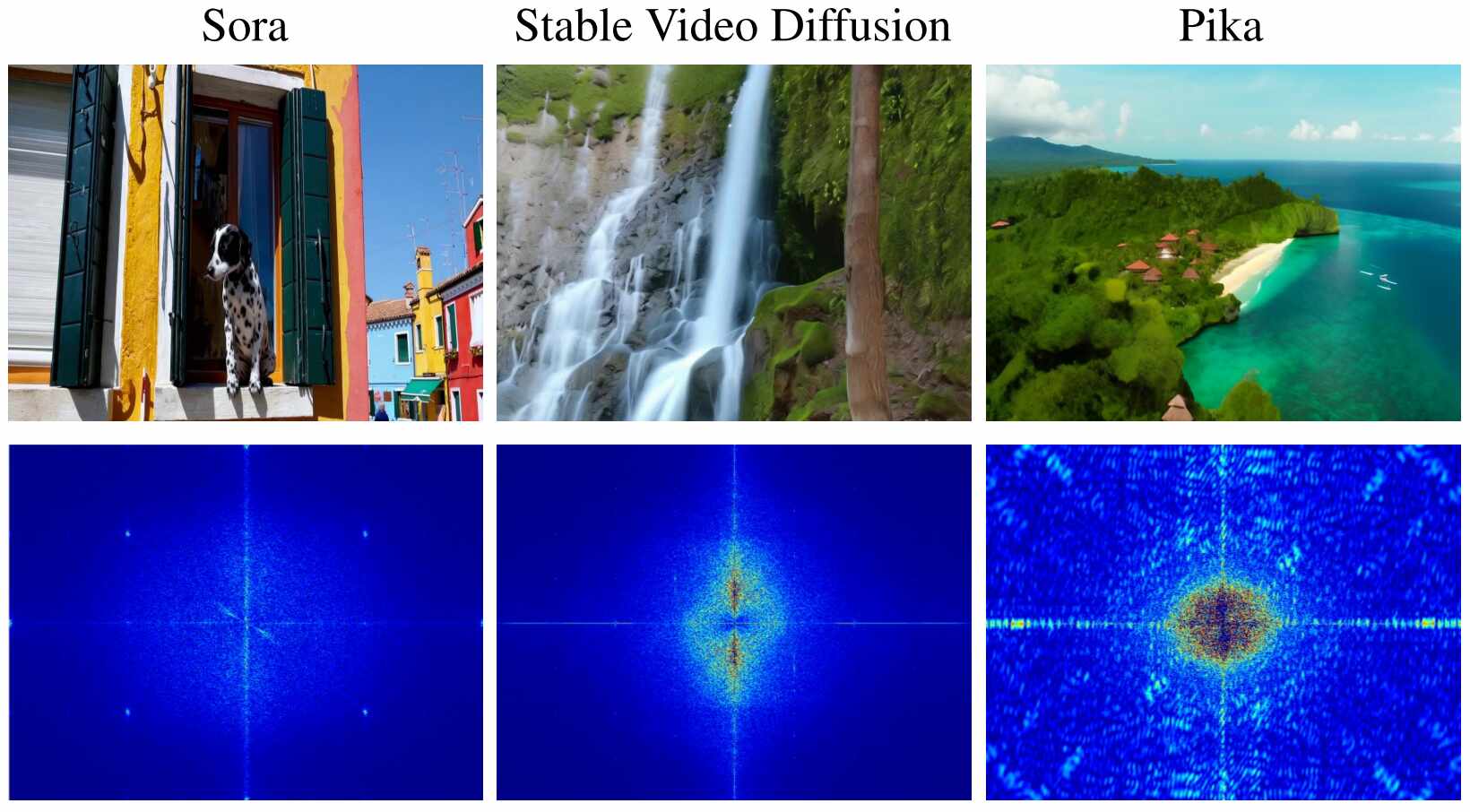

Top row: video frames taken from AI-generated videos. Bottom row: Fourier transforms of the residual forensic traces for each corresponding frame above (what current detectors likely see).

Abstract

Recent advances in generative AI have led to the development of techniques to generate visually realistic synthetic video. While a number of techniques have been developed to detect AI-generated synthetic images, in this paper we show that synthetic image detectors are unable to detect synthetic videos. We demonstrate that this is because synthetic video generators introduce substantially different traces than those left by image generators. Despite this, we show that synthetic video traces can be learned, and used to perform reliable synthetic video detection or generator source attribution even after H.264 re-compression. Furthermore, we demonstrate that while detecting videos from new generators through zero-shot transferability is challenging, accurate detection of videos from a new generator can be achieved through few-shot learning.

Synthetic Image Detectors Don't Work On Videos

Given that a video can be seen as a sequence of images, it is reasonable to expect that synthetic image detectors should be effective at detecting AI-generated synthetic videos. Surprisingly, however, we have found that synthetic image detectors do not successfully identify synthetic videos. Furthermore, we have found that this issue is not primarily caused by the degradation of forensic traces due to H.264 video compression.

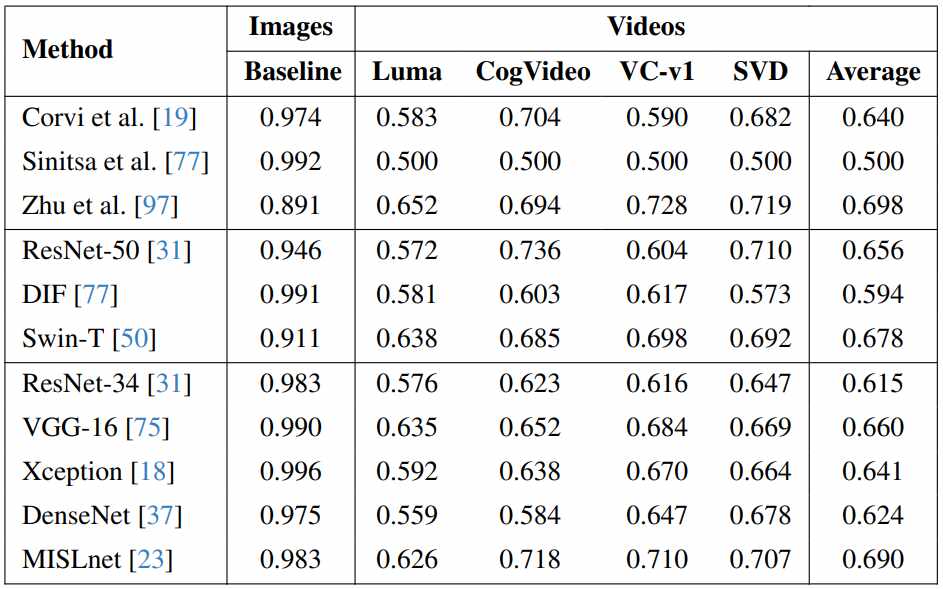

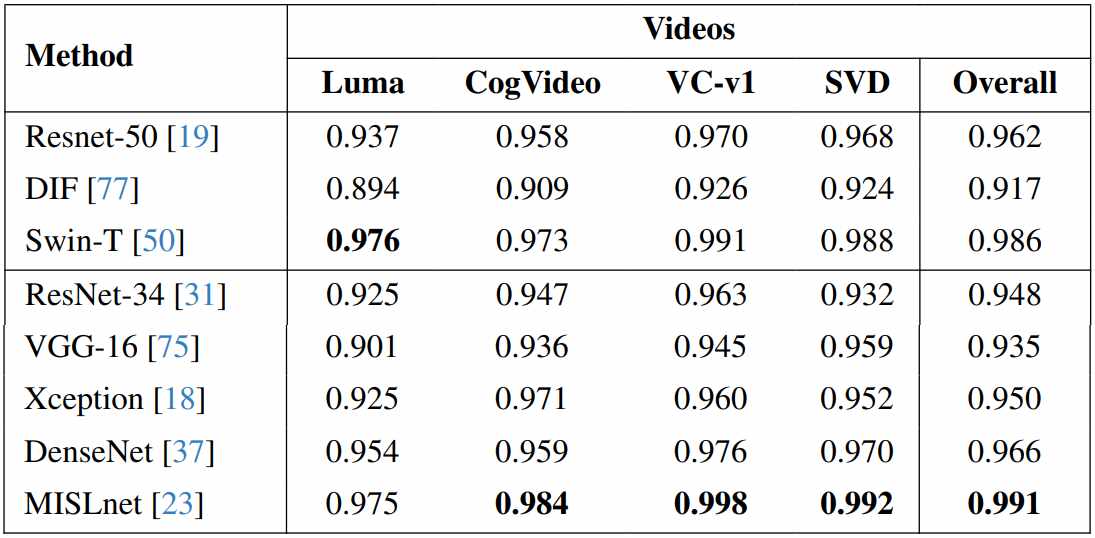

Left: Detection performance of existing synthetic image detectors on synthetic videos. Right: Same as left, but with detectors that were robustly trained on H.264-compressed synthetic images.

Synthetic Video Traces

Here, we present evidence that forensic traces in synthetic video are substantially different from those in synthetic images. We demonstrate this qualitatively by visualizing the low-level forensic traces left by a number of different image and video generators. Given the stark contrast between the traces left by image and video generators, this difference is very likely the main reason why synthetic image detectors perform substantially worse on video. Even when robustly trained, synthetic image detectors learn features that capture forensic traces similar to those they have seen before. Since video traces can be substantially different in nature, synthetic image detectors are not suited to capture them.

Fourier transforms of the residual forensic traces for each frame of a synthetic video. The traces are visually distinct from those of synthetic images.
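The visualization described above can be approximated in a few lines. The sketch below is illustrative only: the page does not state how the residual is extracted, so a simple high-pass residual (frame minus a 3x3 mean-filtered copy) is assumed here, and the function names `box_blur` and `residual_spectrum` are hypothetical.

```python
import numpy as np


def box_blur(frame: np.ndarray, k: int = 3) -> np.ndarray:
    """Blur a 2-D grayscale frame with a k x k mean filter (edge-padded)."""
    pad = k // 2
    padded = np.pad(frame, pad, mode="edge")
    out = np.zeros(frame.shape, dtype=np.float64)
    # Sum the k*k shifted copies of the frame, then normalize.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + frame.shape[0], dx:dx + frame.shape[1]]
    return out / (k * k)


def residual_spectrum(frame: np.ndarray) -> np.ndarray:
    """Log-magnitude Fourier spectrum of an assumed high-pass residual.

    Assumption: residual = frame - blurred frame. A learned or
    denoiser-based residual could be substituted here.
    """
    frame = frame.astype(np.float64)
    residual = frame - box_blur(frame)
    # fftshift moves the zero-frequency term to the center for display.
    spectrum = np.fft.fftshift(np.fft.fft2(residual))
    return np.log1p(np.abs(spectrum))
```

Applying `residual_spectrum` to each grayscale frame and displaying the result as an image gives a rough analogue of the plots shown here. Periodic generator artifacts would appear as isolated bright peaks away from the center.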

Learning Synthetic Video Traces

Results presented in the previous two sections show that traces left by synthetic video generators are different from those left by image generators, and that synthetic image detectors do not reliably detect these traces. In this section, however, we show that synthetic video traces can be learned. Through a series of experiments, we show that CNNs can be trained to accurately perform synthetic video detection and source attribution. Furthermore, we demonstrate that robust training improves the performance of these detectors on H.264 re-compressed video. Additionally, we show how video-level detection can be performed to boost performance over frame-level detection.

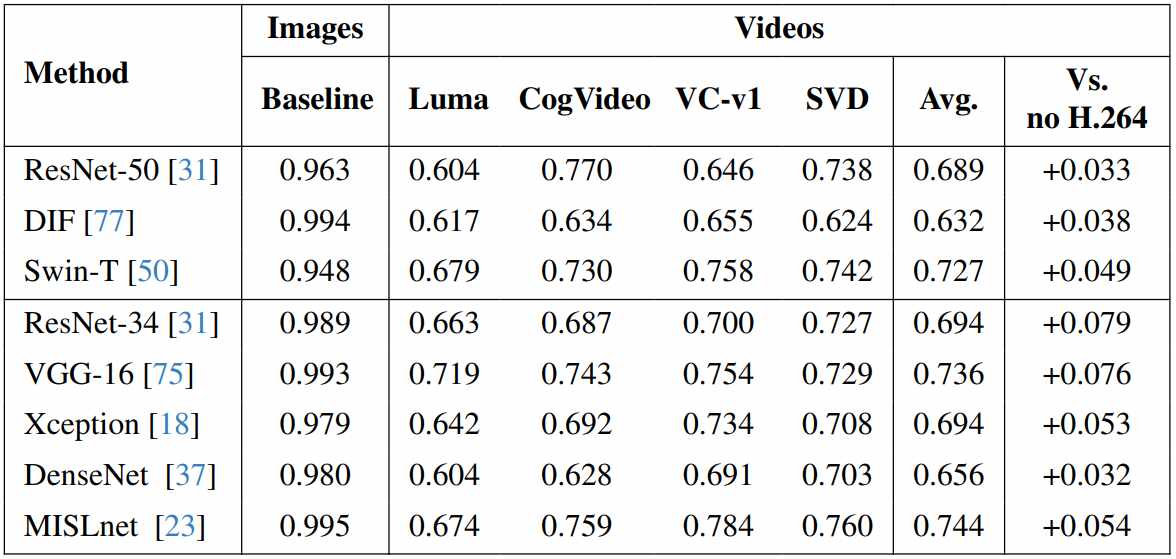

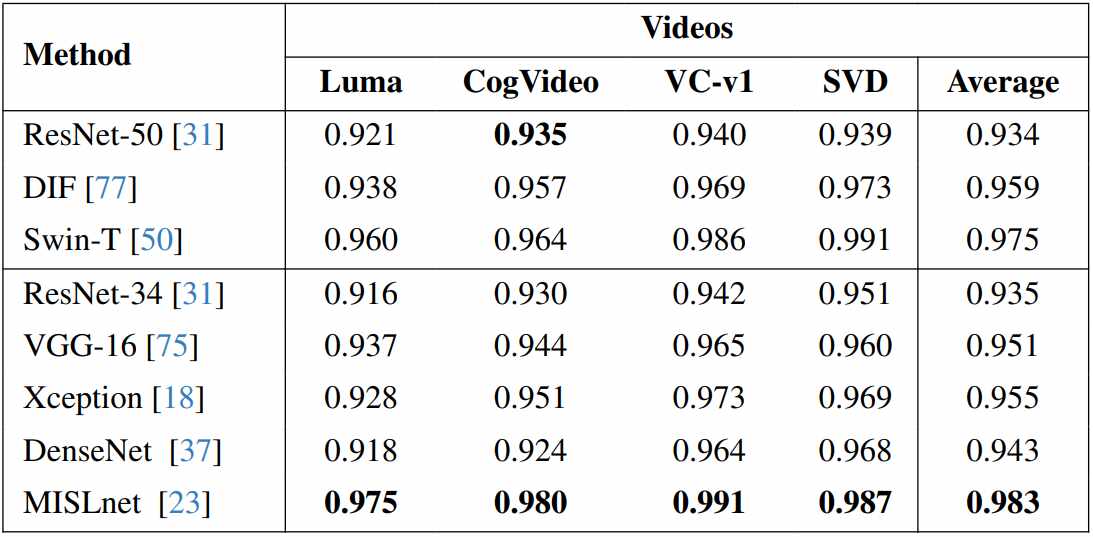

Left: Frame-level detection performance of synthetic video detectors. Right: Frame-level attribution performance of synthetic video detectors.

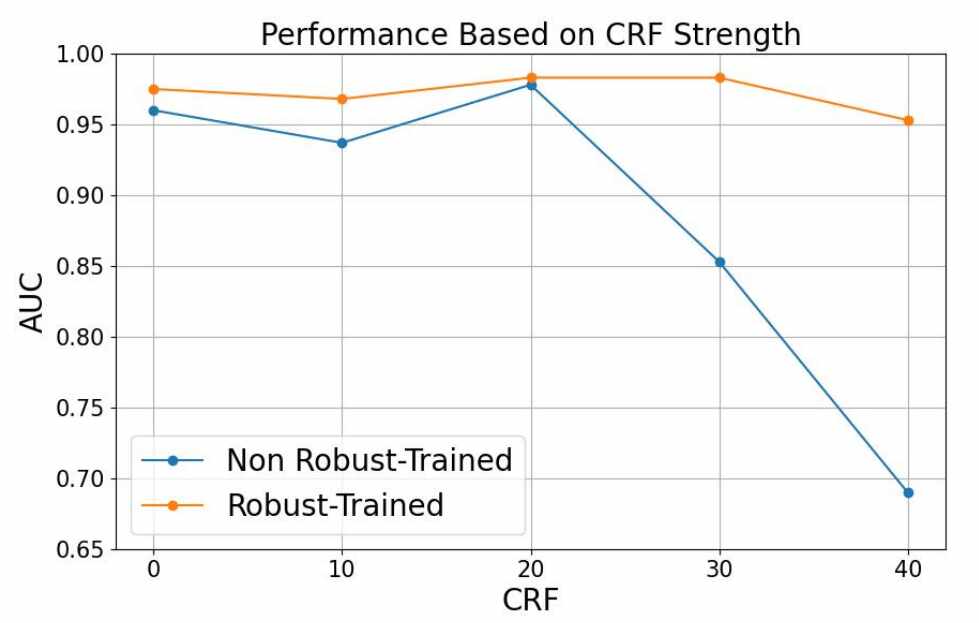

Detection performance of the MISLnet architecture before and after robust training on real and synthetic videos re-compressed with a Constant Rate Factor (CRF) of 40.
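For readers who want to reproduce a comparable re-compression condition, the following is a minimal sketch. It assumes the `ffmpeg` command-line tool with the `libx264` encoder is installed. The page does not say which tool or settings were used beyond CRF 40, so everything else is an assumption, and the helper names are hypothetical.

```python
import subprocess
from typing import List


def h264_recompress_cmd(src: str, dst: str, crf: int = 40) -> List[str]:
    """Build an ffmpeg command that re-encodes `src` to H.264 at the given CRF.

    Higher CRF means stronger compression. 8-bit libx264 accepts 0-51.
    """
    if not 0 <= crf <= 51:
        raise ValueError("CRF must be in the range 0-51 for 8-bit libx264")
    return [
        "ffmpeg", "-y",       # overwrite the output file if it exists
        "-i", src,            # input video
        "-c:v", "libx264",    # H.264 software encoder
        "-crf", str(crf),     # constant rate factor
        "-an",                # drop audio (assumption: only frames matter)
        dst,
    ]


def recompress(src: str, dst: str, crf: int = 40) -> None:
    """Run the command; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(h264_recompress_cmd(src, dst, crf), check=True)
```

Robust training in this setting would mix such re-compressed copies into the training set alongside the original videos.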

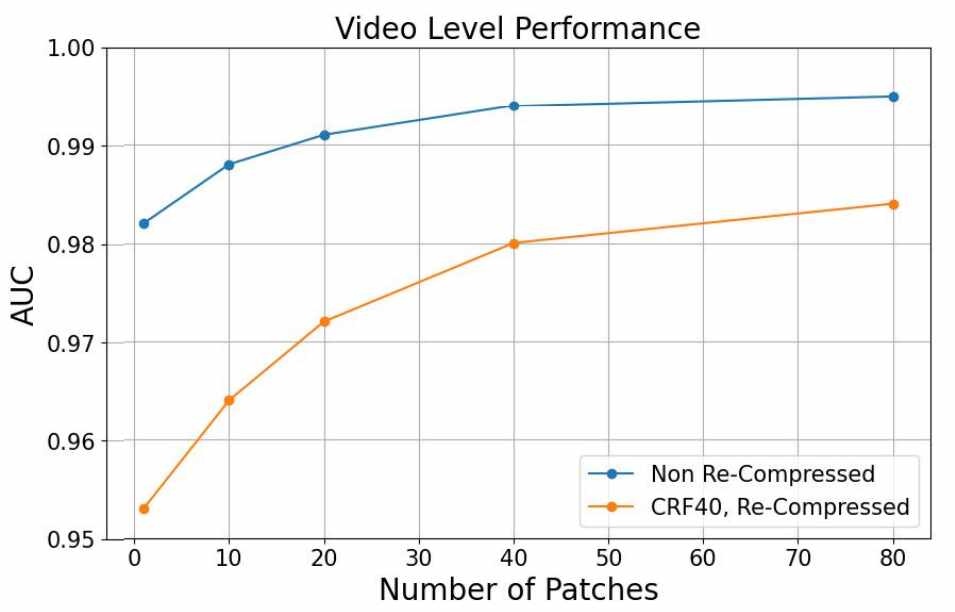

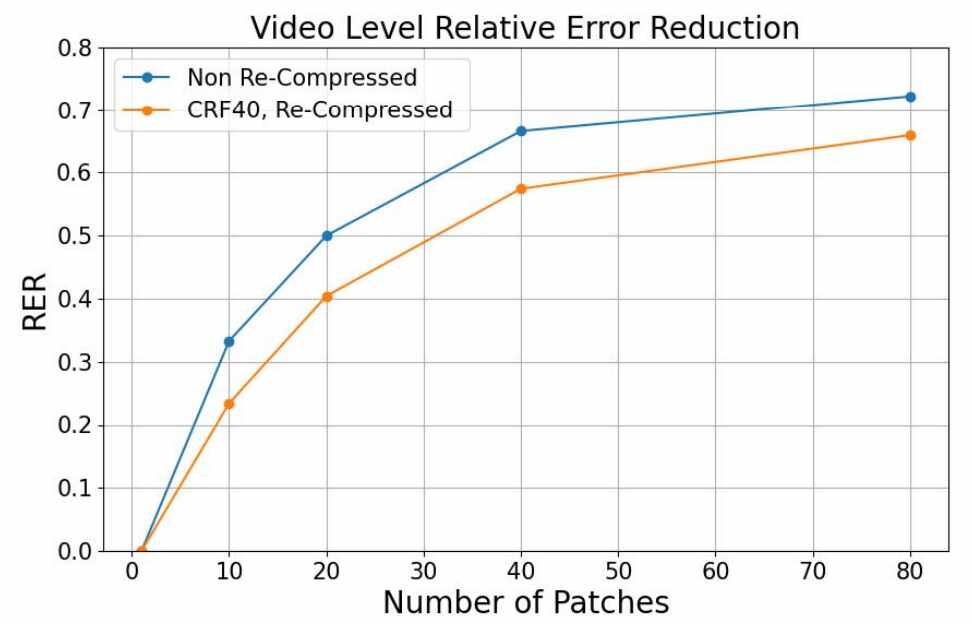

Left: Video-level performance of MISLnet as a function of the number of patches used to obtain the video-level detection score. Right: Relative Error Reduction (RER) of video-level over frame-level performance of MISLnet, as a function of the number of patches used to obtain the video-level detection score.
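As a rough illustration of the two quantities in this figure, the sketch below fuses per-patch scores into one video-level score and computes a relative error reduction between two AUC values. Both definitions are assumptions, since the page does not spell them out: fusion is taken to be a plain mean over the first `num_patches` scores, and RER is taken to be `100 * (auc_new - auc_ref) / (1 - auc_ref)`. The function names are hypothetical.

```python
from typing import Sequence

import numpy as np


def video_level_score(patch_scores: Sequence[float], num_patches: int) -> float:
    """Fuse per-patch detector scores into one score (assumed: simple mean)."""
    scores = np.asarray(patch_scores, dtype=np.float64)
    if not 1 <= num_patches <= scores.size:
        raise ValueError("num_patches must be between 1 and len(patch_scores)")
    return float(scores[:num_patches].mean())


def relative_error_reduction(auc_ref: float, auc_new: float) -> float:
    """Percentage of the reference error (1 - AUC) removed by the new method.

    Assumed definition: 100 * (auc_new - auc_ref) / (1 - auc_ref).
    """
    if auc_ref >= 1.0:
        raise ValueError("reference AUC must be below 1.0")
    return 100.0 * (auc_new - auc_ref) / (1.0 - auc_ref)
```

Under this definition, going from a frame-level AUC of 0.90 to a video-level AUC of 0.95 removes half of the remaining error, which is why small AUC gains near 1.0 can still correspond to a large RER.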

Detection Transferability to New Generators

We examined the zero-shot transferability of synthetic video detectors, that is, a detector's ability to detect videos from a new generator without any re-training. These results show that while the detector achieves strong performance on videos from generators seen during training, performance drops significantly when evaluating on new generators. This is unsurprising because traces left by different generators can vary substantially. As a result, it is difficult for a detector to capture traces from new generators that differ from those seen in training.

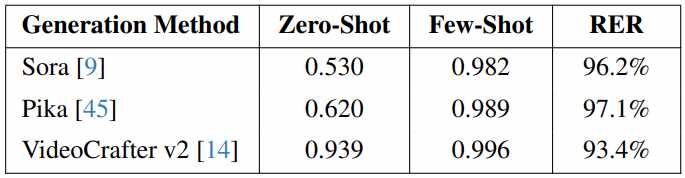

Additionally, we performed few-shot learning experiments to evaluate the detector's ability to detect videos from new generators with only a few examples. These results show that the detector can very accurately transfer to detect new generators through few-shot learning. This is particularly important given the rapid pace with which new generators such as Sora are emerging.

Left: Zero-shot detection performance of MISLnet, which was trained on 3 out of 4 synthetic video generation sources and tested on the remaining one. Performance numbers are in AUC. Right: Zero-shot and few-shot detection performance of MISLnet, which was trained on all training generators and tested on new generation sources. Performance numbers are measured using AUC and RER.
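The leave-one-generator-out protocol described in the caption above (train on 3 of 4 sources, test on the remaining one) can be sketched as follows. This is only an illustration of the split logic. The function name and the generator and file names in the usage note are hypothetical placeholders, not the actual sources used in the experiments.

```python
from typing import Dict, Iterator, List, Tuple


def leave_one_generator_out(
    videos_by_generator: Dict[str, List[str]],
) -> Iterator[Tuple[str, List[str], List[str]]]:
    """Yield (held_out_generator, train_videos, test_videos) for each generator.

    Each generator is held out once; the detector would be trained on
    videos from all other generators and evaluated on the held-out one.
    """
    names = sorted(videos_by_generator)
    for held_out in names:
        train = [
            video
            for name in names
            if name != held_out
            for video in videos_by_generator[name]
        ]
        test = list(videos_by_generator[held_out])
        yield held_out, train, test
```

For example, with four placeholder sources `{"gen_a": [...], "gen_b": [...], "gen_c": [...], "gen_d": [...]}`, the function yields four splits, each training on three sources and testing on the fourth. In practice, real videos would also need to be included in both the training and test sets.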

Bibtex

@InProceedings{Vahdati_2024_CVPR,
    author    = {Vahdati, Danial Samadi and Nguyen, Tai D. and Azizpour, Aref and Stamm, Matthew C.},
    title     = {Beyond Deepfake Images: Detecting AI-Generated Videos},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
    month     = {June},
    year      = {2024},
}